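tl;dr

- client-go has an event aggregation feature that groups similar events into one, and it also applies spam filtering to events.

- Event aggregation uses the aggregate key generated by EventAggregatorByReasonFunc as its key. If 10 or more events with the same aggregate key (but different messages) are published within 10 minutes, client-go updates the existing event instead of publishing a new one, which saves etcd capacity.

- Spam filtering uses the value computed by the getSpamKey function as its key. The spam filter uses a rate limiter based on the token bucket algorithm, with an initial 25 tokens and a refill rate of 1 token every 5 minutes. Events published beyond this limit are discarded.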

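Background

When I wanted to create a custom controller that detects a CronJob's child Jobs and performs an action, I noticed that CronJobController (v2) publishes an event named SawCompletedJob when a child Job completes. I thought, "Oh, I can simply use that event as a trigger."

However, when I created a CronJob that runs every minute in a local cluster, I found that SawCompletedJob events became unobservable partway through (around the 10th run). Specifically, the events looked like the following.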

    $ kubectl alpha events --for cronjob/hello
    ...
    8m29s (x2 over 8m29s) Normal SawCompletedJob CronJob/hello Saw completed job: hello-28023907, status: Complete
    8m29s Normal SuccessfulDelete CronJob/hello Deleted job hello-28023904
    7m35s Normal SuccessfulCreate CronJob/hello Created job hello-28023908
    7m28s (x2 over 7m28s) Normal SawCompletedJob CronJob/hello Saw completed job: hello-28023908, status: Complete
    7m28s Normal SuccessfulDelete CronJob/hello Deleted job hello-28023905
    6m35s Normal SuccessfulCreate CronJob/hello Created job hello-28023909
    6m28s Normal SawCompletedJob CronJob/hello Saw completed job: hello-28023909, status: Complete
    2m35s (x3 over 4m35s) Normal SuccessfulCreate CronJob/hello (combined from similar events): Created job hello-28023913

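SawCompletedJob events were published up to 6m28s ago, but after that they were no longer observable. SuccessfulCreate events are still published in aggregated form, but not all of them are visible. Incidentally, all events from the child Jobs and child Pods can be observed.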

    $ kubectl alpha events
    4m18s Normal Scheduled Pod/hello-28023914-frb94 Successfully assigned default/hello-28023914-frb94 to kind-control-plane
    4m11s Normal Completed Job/hello-28023914 Job completed
    3m18s Normal Started Pod/hello-28023915-5fsh5 Started container hello
    3m18s Normal SuccessfulCreate Job/hello-28023915 Created pod: hello-28023915-5fsh5
    3m18s Normal Created Pod/hello-28023915-5fsh5 Created container hello
    3m18s Normal Pulled Pod/hello-28023915-5fsh5 Container image "busybox:1.28" already present on machine
    3m18s Normal Scheduled Pod/hello-28023915-5fsh5 Successfully assigned default/hello-28023915-5fsh5 to kind-control-plane
    3m11s Normal Completed Job/hello-28023915 Job completed
    2m18s Normal Started Pod/hello-28023916-qbqqk Started container hello
    2m18s Normal Pulled Pod/hello-28023916-qbqqk Container image "busybox:1.28" already present on machine
    2m18s Normal Created Pod/hello-28023916-qbqqk Created container hello
    2m18s Normal SuccessfulCreate Job/hello-28023916 Created pod: hello-28023916-qbqqk
    2m18s Normal Scheduled Pod/hello-28023916-qbqqk Successfully assigned default/hello-28023916-qbqqk to kind-control-plane
    2m11s Normal Completed Job/hello-28023916 Job completed
    78s Normal SuccessfulCreate Job/hello-28023917 Created pod: hello-28023917-kpxvn
    78s Normal Created Pod/hello-28023917-kpxvn Created container hello
    78s Normal Pulled Pod/hello-28023917-kpxvn Container image "busybox:1.28" already present on machine
    78s Normal Started Pod/hello-28023917-kpxvn Started container hello
    78s Normal Scheduled Pod/hello-28023917-kpxvn Successfully assigned default/hello-28023917-kpxvn to kind-control-plane
    71s Normal Completed Job/hello-28023917 Job completed
    18s (x8 over 7m18s) Normal SuccessfulCreate CronJob/hello (combined from similar events): Created job hello-28023918
    18s Normal Started Pod/hello-28023918-grvbz Started container hello
    18s Normal Created Pod/hello-28023918-grvbz Created container hello
    18s Normal Pulled Pod/hello-28023918-grvbz Container image "busybox:1.28" already present on machine
    18s Normal SuccessfulCreate Job/hello-28023918 Created pod: hello-28023918-grvbz
    18s Normal Scheduled Pod/hello-28023918-grvbz Successfully assigned default/hello-28023918-grvbz to kind-control-plane
    11s Normal Completed Job/hello-28023918 Job completed

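Since I couldn't find any specific description in the official Kubernetes documentation, I read the source code to figure out what was happening.

Kubernetes event publication flow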

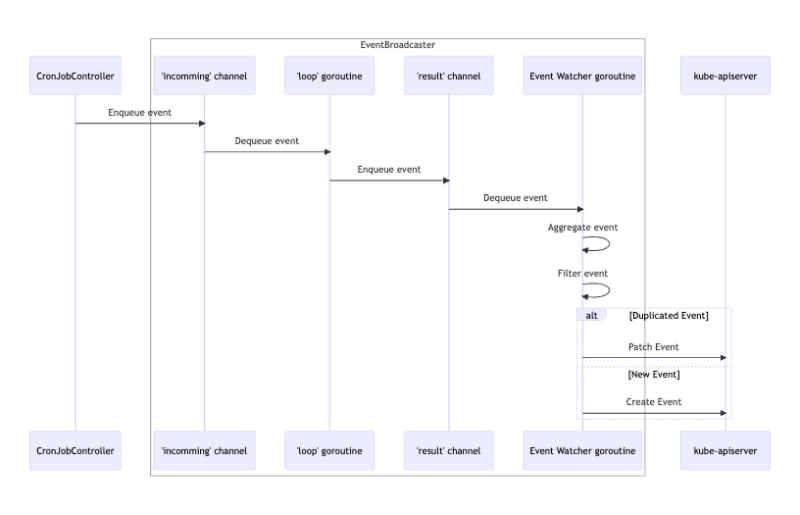

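client-go sends Kubernetes events to kube-apiserver, which stores them in etcd. client-go is responsible for event aggregation and spam filtering, but the flow by which it sends events to kube-apiserver is fairly complex, so let's go over it first. We use CronJobController's SawCompletedJob event as an example; note that the details may differ from controller to controller.

- CronJobController publishes the event through the recorder's Eventf method. This method internally calls the Broadcaster's ActionOrDrop method, which sends the event to the incoming channel.

- Events in the incoming channel are retrieved by the loop goroutine and forwarded to each Watcher's result channel.

- The event watcher goroutine calls the eventHandler for each event received from the result channel. The eventHandler calls recordToSink of eventBroadcasterImpl, where the EventCorrelator performs event aggregation and spam filtering, and then calls recordEvent to publish the event (or to update the existing event if it has been aggregated).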
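To make the three steps above more concrete, here is a heavily simplified model of the same pipeline using only Go channels. The names (broadcaster, actionOrDrop, startEventWatcher, publish) echo the roles described above but are hypothetical; the queue sizes, the drop-if-full policy and the handler are assumptions, and this is not client-go's implementation.

```go
package main

import (
	"fmt"
	"sync"
)

// event is a stand-in for v1.Event that keeps only the fields used here.
type event struct {
	Reason  string
	Message string
}

// broadcaster plays the role of watch.Broadcaster in this toy model.
type broadcaster struct {
	incoming chan event     // the "incoming" channel
	mu       sync.Mutex     // guards watchers
	watchers []chan event   // one result channel per watcher
	wg       sync.WaitGroup // tracks the event watcher goroutines
}

// newBroadcaster creates the broadcaster and starts its loop goroutine.
func newBroadcaster(queueLen int) *broadcaster {
	b := &broadcaster{incoming: make(chan event, queueLen)}
	go b.loop()
	return b
}

// actionOrDrop enqueues e without blocking, dropping it if the queue is full.
func (b *broadcaster) actionOrDrop(e event) bool {
	select {
	case b.incoming <- e:
		return true
	default:
		return false
	}
}

// loop forwards each incoming event to every watcher's result channel and
// closes those channels once incoming is closed.
func (b *broadcaster) loop() {
	for e := range b.incoming {
		b.mu.Lock()
		for _, w := range b.watchers {
			w <- e
		}
		b.mu.Unlock()
	}
	b.mu.Lock()
	for _, w := range b.watchers {
		close(w)
	}
	b.mu.Unlock()
}

// startEventWatcher registers a result channel and starts a goroutine that
// calls handler for every event received on it.
func (b *broadcaster) startEventWatcher(handler func(event)) {
	result := make(chan event, 16)
	b.mu.Lock()
	b.watchers = append(b.watchers, result)
	b.mu.Unlock()
	b.wg.Add(1)
	go func() {
		defer b.wg.Done()
		for e := range result {
			handler(e)
		}
	}()
}

// publish pushes events through the model and returns the reasons the
// handler saw, in order.
func publish(events []event) []string {
	b := newBroadcaster(10)
	var sunk []string
	// In client-go the handler would end up in recordToSink; here it only records the reason.
	b.startEventWatcher(func(e event) { sunk = append(sunk, e.Reason) })
	for _, e := range events {
		b.actionOrDrop(e)
	}
	close(b.incoming) // stop the loop so this demo terminates
	b.wg.Wait()
	return sunk
}

func main() {
	fmt.Println(publish([]event{
		{"SuccessfulCreate", "Created job hello-1"},
		{"SawCompletedJob", "Saw completed job: hello-1, status: Complete"},
		{"SuccessfulDelete", "Deleted job hello-0"},
	}))
}
```

The point of the model is the decoupling: the controller only enqueues, and everything after that (including aggregation and spam filtering) happens on other goroutines.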

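Note: starting the loop goroutine

The loop goroutine is started in the NewLongQueueBroadcaster function, which is called via the NewBroadcaster function. The NewBroadcaster function is called in the NewControllerV2 function.

Note: starting the event watcher

CronJobController calls the StartRecordingToSink method of eventBroadcasterImpl, which starts the event watcher through the StartEventWatcher method. The StartEventWatcher method initializes a Watcher and registers it through the Broadcaster's Watch method. What I found interesting is that the registration of the Watcher itself is sent to the incoming channel and executed by the loop goroutine, so that events queued before the watcher was registered are not delivered to it (the comment calls this a "terrible hack").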
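The "terrible hack" can be illustrated with a toy version of the same idea: registration is pushed through the incoming queue as a function that the loop goroutine runs, and the caller waits until it has run. Everything here (message, blockQueue, watch, demo) is a hypothetical simplification; the real Broadcaster has more machinery, such as locking and shutdown handling.

```go
package main

import (
	"fmt"
	"sync"
)

// message is either a normal event or the internal "run this function" marker.
type message struct {
	event string
	run   func()
}

// broadcaster is a toy model; watchers is only ever touched by the loop goroutine.
type broadcaster struct {
	incoming chan message
	watchers []chan string
}

func newBroadcaster() *broadcaster {
	b := &broadcaster{incoming: make(chan message, 10)}
	go b.loop()
	return b
}

// loop handles messages strictly in queue order: functions are run, events are fanned out.
func (b *broadcaster) loop() {
	for m := range b.incoming {
		if m.run != nil {
			m.run()
			continue
		}
		for _, w := range b.watchers {
			w <- m.event
		}
	}
	for _, w := range b.watchers {
		close(w)
	}
}

// blockQueue sends f through the incoming queue and waits until the loop has run it,
// so f runs only after everything queued before it has been distributed.
func (b *broadcaster) blockQueue(f func()) {
	var wg sync.WaitGroup
	wg.Add(1)
	b.incoming <- message{run: func() {
		defer wg.Done()
		f()
	}}
	wg.Wait()
}

// watch registers a watcher through the queue instead of appending directly.
func (b *broadcaster) watch() <-chan string {
	ch := make(chan string, 10)
	b.blockQueue(func() { b.watchers = append(b.watchers, ch) })
	return ch
}

// demo queues e1, adds a second watcher, queues e2, and reports what each watcher saw.
func demo() (first, second []string) {
	b := newBroadcaster()
	w1 := b.watch()
	b.incoming <- message{event: "e1"}
	w2 := b.watch()
	b.incoming <- message{event: "e2"}
	close(b.incoming)
	for e := range w1 {
		first = append(first, e)
	}
	for e := range w2 {
		second = append(second, e)
	}
	return first, second
}

func main() {
	first, second := demo()
	fmt.Println(first, second)
}
```

The first watcher sees both events, while the second one, registered after e1 was queued, sees only e2.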

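EventCorrelator

EventCorrelator implements the core logic of Kubernetes event aggregation and spam filtering. It is initialized by the NewEventCorrelatorWithOptions function in the StartRecordingToSink method of eventBroadcasterImpl. Note that e.options is empty here, so the controller uses the default values. The recordToSink method of eventBroadcasterImpl calls the EventCorrelate method, which aggregates the event and applies the spam filter.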
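The order of the steps inside EventCorrelate matters for what follows. As we read it, EventCorrelate first aggregates, then observes (deciding whether to patch an existing event), and only then runs the spam filter on the result. The sketch below models just that ordering with hypothetical stand-ins; correlator, correlateResult and newDemoCorrelator are our names, not client-go's.

```go
package main

import "fmt"

// event is a minimal stand-in for v1.Event.
type event struct {
	Reason, Message string
	Count           int
}

// correlateResult loosely mirrors the idea of EventCorrelateResult: skip, or an event plus a patch flag.
type correlateResult struct {
	Event *event
	Patch bool
	Skip  bool
}

// correlator wires three hypothetical steps together in the order described above.
type correlator struct {
	aggregate func(*event) (*event, string)       // may rewrite the event; returns the cache key
	observe   func(*event, string) (*event, bool) // returns the event and whether to patch an existing one
	filter    func(*event) bool                   // true means "drop as spam"
}

func (c *correlator) correlate(e *event) correlateResult {
	agg, key := c.aggregate(e)
	obs, patch := c.observe(agg, key)
	// The filter sees the already aggregated/observed event, so aggregated
	// events consume rate-limit tokens just like ordinary ones.
	if c.filter(obs) {
		return correlateResult{Skip: true}
	}
	return correlateResult{Event: obs, Patch: patch}
}

// newDemoCorrelator uses trivial stand-ins: no real aggregation, a counting
// observer, and a filter that lets only `budget` events through.
func newDemoCorrelator(budget int) *correlator {
	seen := map[string]int{}
	return &correlator{
		aggregate: func(e *event) (*event, string) { return e, e.Reason + "/" + e.Message },
		observe: func(e *event, key string) (*event, bool) {
			seen[key]++
			cp := *e
			cp.Count = seen[key]
			return &cp, seen[key] > 1 // a repeated key becomes a patch
		},
		filter: func(*event) bool {
			budget--
			return budget < 0
		},
	}
}

func main() {
	c := newDemoCorrelator(2)
	for _, e := range []event{
		{Reason: "SawCompletedJob", Message: "Saw completed job: hello-1"},
		{Reason: "SawCompletedJob", Message: "Saw completed job: hello-1"},
		{Reason: "SuccessfulDelete", Message: "Deleted job hello-0"},
	} {
		e := e
		r := c.correlate(&e)
		if r.Skip {
			fmt.Println(e.Reason, "skipped")
			continue
		}
		fmt.Println(e.Reason, "count", r.Event.Count, "patch", r.Patch)
	}
}
```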

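Aggregation

The EventAggregate method of EventCorrelator's EventAggregator and the eventObserve method of the eventLogger are used for event aggregation. The source code has detailed comments, so I recommend reading it directly for more information, but here is a brief overview of the process:

- Compute the aggregateKey and localKey with EventAggregatorByReasonFunc. The aggregateKey consists of event.Source, event.InvolvedObject, event.Type, event.Reason, event.ReportingController and event.ReportingInstance, while the localKey is event.Message.

- Look up the EventAggregator's cache with the aggregateKey. The cached value is an aggregateRecord. If the number of distinct localKeys in that record is greater than or equal to maxEvents (default 10), return the aggregateKey as the key; otherwise, return the eventKey. The record is reset when more than maxIntervalInSeconds (default 600 seconds) have passed since the previous similar event. Note that the eventKey is essentially unique to each event, since it includes the message.

- The eventLogger's eventObserve method looks up the eventLogger's cache with the key returned by EventAggregate. The cached value is an eventLog. If the key is found in the cache (for example, when it is the aggregateKey), compute a patch to update the existing event.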
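The aggregation steps above can be sketched as follows. This is a simplified, dependency-free model under stated assumptions: the key fields follow the list above, the "/" separator and the eventKey construction are our own stand-ins (client-go's getEventKey has its own field list), a plain map replaces client-go's LRU cache, and the patching done by eventObserve is not modeled.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

type source struct{ Component, Host string }

type objectRef struct{ Kind, Namespace, Name, UID, APIVersion string }

// event keeps only the fields that feed the keys described above.
type event struct {
	Source              source
	InvolvedObject      objectRef
	Type, Reason        string
	ReportingController string
	ReportingInstance   string
	Message             string
}

// byReason follows the field list given above for EventAggregatorByReasonFunc:
// everything except the message forms the aggregate key; the message is the local key.
func byReason(e event) (aggregateKey, localKey string) {
	o := e.InvolvedObject
	parts := []string{e.Source.Component, e.Source.Host, o.Kind, o.Namespace, o.Name, o.UID,
		o.APIVersion, e.Type, e.Reason, e.ReportingController, e.ReportingInstance}
	return strings.Join(parts, "/"), e.Message
}

type aggregateRecord struct {
	localKeys     map[string]struct{} // distinct messages seen for this aggregate key
	lastTimestamp time.Time
}

type aggregator struct {
	maxEvents   int
	maxInterval time.Duration
	cache       map[string]*aggregateRecord // client-go uses a bounded LRU cache here
}

// aggregate returns the (possibly rewritten) event and the key the event logger should use.
func (a *aggregator) aggregate(e event, now time.Time) (event, string) {
	aggKey, localKey := byReason(e)
	eventKey := aggKey + "/" + localKey // hypothetical stand-in for getEventKey
	rec, ok := a.cache[aggKey]
	if !ok || now.Sub(rec.lastTimestamp) > a.maxInterval {
		// No record, or the last similar event is too old: start over.
		rec = &aggregateRecord{localKeys: map[string]struct{}{}}
		a.cache[aggKey] = rec
	}
	rec.localKeys[localKey] = struct{}{}
	rec.lastTimestamp = now
	if len(rec.localKeys) < a.maxEvents {
		return e, eventKey // below the threshold: keep the event as-is
	}
	// Keep the set from growing past maxEvents by dropping one arbitrary entry.
	for k := range rec.localKeys {
		delete(rec.localKeys, k)
		break
	}
	// Over the threshold: rewrite the message and return the aggregate key so the
	// event logger finds the earlier aggregate event and patches it.
	e.Message = "(combined from similar events): " + e.Message
	return e, aggKey
}

// demo feeds twelve SuccessfulCreate events, one per minute, each with a new
// job name, and reports which ones came out aggregated.
func demo() []bool {
	a := &aggregator{maxEvents: 10, maxInterval: 600 * time.Second, cache: map[string]*aggregateRecord{}}
	start := time.Date(2023, 4, 1, 0, 0, 0, 0, time.UTC)
	var aggregated []bool
	for i := 0; i < 12; i++ {
		e := event{
			Source:         source{Component: "cronjob-controller"},
			InvolvedObject: objectRef{Kind: "CronJob", Namespace: "default", Name: "hello"},
			Type:           "Normal",
			Reason:         "SuccessfulCreate",
			Message:        fmt.Sprintf("Created job hello-%d", i),
		}
		out, _ := a.aggregate(e, start.Add(time.Duration(i)*time.Minute))
		aggregated = append(aggregated, strings.HasPrefix(out.Message, "(combined"))
	}
	return aggregated
}

func main() {
	fmt.Println(demo())
}
```

With a new job name every minute, the tenth event is the first one that comes out with the "(combined from similar events)" prefix, the same prefix seen on the SuccessfulCreate events in the kubectl output.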

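Spam filtering

For spam filtering, EventCorrelator calls its filterFunc. The actual implementation of filterFunc is the Filter method of EventSourceObjectSpamFilter. Again, I recommend reading the source code for details, but here is a brief overview of the process:

- Compute the eventKey with the getSpamKey function.

- Look up the EventSourceObjectSpamFilter's cache with the eventKey. The cached value is a spamRecord, which holds the rate limiter. The default values for the rate limiter's qps and burst are 1/300 and 25, respectively. According to the comment, the rate limiter uses the token bucket algorithm, so it starts with 25 tokens and then refills one token every 5 minutes.

- Call the TryAccept method of TokenBucketPassiveRateLimiter to check the rate limit. If the limit is exceeded, the event is discarded.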
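The spam filter's behavior with the default qps (1/300) and burst (25) can be reproduced with a small passive token bucket keyed by spam key. This sketch hand-rolls the token bucket with only the standard library; it is not client-go's TokenBucketPassiveRateLimiter, and spamFilter, tokenBucket and the key string are hypothetical names.

```go
package main

import (
	"fmt"
	"time"
)

// tokenBucket is a passive token bucket: it never waits, it only answers
// whether a token is available now. Tokens refill continuously at qps, up to burst.
type tokenBucket struct {
	qps, burst, tokens float64
	last               time.Time
}

func newTokenBucket(qps float64, burst int, now time.Time) *tokenBucket {
	// A new bucket starts full.
	return &tokenBucket{qps: qps, burst: float64(burst), tokens: float64(burst), last: now}
}

// tryAccept refills for the elapsed time, then takes one token if one is available.
func (b *tokenBucket) tryAccept(now time.Time) bool {
	b.tokens += now.Sub(b.last).Seconds() * b.qps
	if b.tokens > b.burst {
		b.tokens = b.burst
	}
	b.last = now
	if b.tokens < 1 {
		return false
	}
	b.tokens--
	return true
}

// spamFilter keeps one bucket per spam key.
type spamFilter struct {
	qps   float64
	burst int
	cache map[string]*tokenBucket // client-go stores spamRecords in an LRU cache
}

// filter reports true when the event with this spam key should be discarded.
func (f *spamFilter) filter(spamKey string, now time.Time) bool {
	b, ok := f.cache[spamKey]
	if !ok {
		b = newTokenBucket(f.qps, f.burst, now)
		f.cache[spamKey] = b
	}
	return !b.tryAccept(now)
}

// demo sends 30 events at once, then one after 299s and one after 301s,
// using qps 1/300 and burst 25.
func demo() (passedAtOnce int, passedAt299, passedAt301 bool) {
	t0 := time.Date(2023, 4, 1, 0, 0, 0, 0, time.UTC)
	f := &spamFilter{qps: 1.0 / 300, burst: 25, cache: map[string]*tokenBucket{}}
	key := "cronjob-controller/CronJob/default/hello" // hypothetical spam key
	for i := 0; i < 30; i++ {
		if !f.filter(key, t0) {
			passedAtOnce++
		}
	}
	passedAt299 = !f.filter(key, t0.Add(299*time.Second))
	passedAt301 = !f.filter(key, t0.Add(301*time.Second))
	return passedAtOnce, passedAt299, passedAt301
}

func main() {
	fmt.Println(demo())
}
```

Of thirty events sent at the same instant, 25 pass; the next token only becomes available once 300 seconds have elapsed.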

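Why did SawCompletedJob events become unobservable?

With the above in mind, let's consider why the SawCompletedJob events became unobservable. In short, it was most likely caused by the spam filter.

- CronJobController emits three events, SuccessfulCreate, SawCompletedJob and SuccessfulDelete, for each job, that is, every minute (strictly speaking, it publishes SuccessfulDelete only once the job history limit is reached).

- The controller uses a spam filter whose key is based only on the source and the involved object (see the getSpamKey function). Therefore, all three event types are treated as the same event and draw from the same token bucket.

- The controller consumes the initial 25 tokens at a rate of three tokens per minute. One token is refilled every five minutes, but the tokens run out after about nine minutes. After that, one token is still refilled every five minutes, but it is consumed by the (aggregated) SuccessfulCreate event, the first event of each run, so SawCompletedJob and SuccessfulDelete were no longer published.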
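The "about nine minutes" figure can be checked with a back-of-the-envelope calculation. Assuming exactly three events per minute and treating the refill of one token per five minutes as continuous (both simplifications), the number of remaining tokens N after t minutes is:

$$
N(t) = 25 + \frac{t}{5} - 3t = 25 - \frac{14}{5}\,t,
\qquad
N(t) = 0 \;\Longleftrightarrow\; t = \frac{125}{14} \approx 8.9\ \text{minutes}.
$$

From then on, the bucket supplies one token every five minutes while the controller emits about fifteen events in the same period, so roughly one event in fifteen can get through.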

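Note: event types

The three events emitted by CronJobController have the same source and involvedObject (only resourceVersion differs) and differ in reason and message.

SuccessfulCreate event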

    apiVersion: v1
    kind: Event
    involvedObject:
      apiVersion: batch/v1
      kind: CronJob
      name: hello
      namespace: default
      resourceVersion: "289520"
      uid: 5f3cfeca-8a83-452a-beb9-7a5f9c1eff63
    source:
      component: cronjob-controller
    ...
    reason: SuccessfulCreate
    message: Created job hello-28025408

SawCompletedJob event

    apiVersion: v1
    kind: Event
    involvedObject:
      apiVersion: batch/v1
      kind: CronJob
      name: hello
      namespace: default
      resourceVersion: "289020"
      uid: 5f3cfeca-8a83-452a-beb9-7a5f9c1eff63
    source:
      component: cronjob-controller
    ...
    reason: SawCompletedJob
    message: 'Saw completed job: hello-28025408, status: Complete'

SuccessfulDelete event

    apiVersion: v1
    kind: Event
    involvedObject:
      apiVersion: batch/v1
      kind: CronJob
      name: hello
      namespace: default
      resourceVersion: "289520"
      uid: 5f3cfeca-8a83-452a-beb9-7a5f9c1eff63
    source:
      component: cronjob-controller
    ...
    reason: SuccessfulDelete
    message: Deleted job hello-28025408
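To check the claim above against these three events, the sketch below rebuilds them from their visible fields and computes a spam key and an aggregate key for each. The field lists follow our reading of getSpamKey and EventAggregatorByReasonFunc; the "/" separator is our choice, source.host is elided in the YAML so it is left empty, the type "Normal" is taken from the kubectl output, and reportingController/reportingInstance are omitted. resourceVersion is deliberately not part of the spam key, which is why SawCompletedJob's different resourceVersion does not give it a bucket of its own.

```go
package main

import (
	"fmt"
	"strings"
)

// objectRef holds the involvedObject fields shown in the YAML above.
type objectRef struct{ APIVersion, Kind, Name, Namespace, ResourceVersion, UID string }

// event keeps source.component/host, type, reason and message.
type event struct {
	InvolvedObject  objectRef
	Component, Host string
	Type, Reason    string
	Message         string
}

// spamKey follows our reading of getSpamKey: source plus involved object,
// without resourceVersion.
func spamKey(e event) string {
	o := e.InvolvedObject
	return strings.Join([]string{e.Component, e.Host, o.Kind, o.Namespace, o.Name, o.UID, o.APIVersion}, "/")
}

// aggregateKey additionally includes type and reason (reporting fields omitted).
func aggregateKey(e event) string {
	return spamKey(e) + "/" + e.Type + "/" + e.Reason
}

// cronJobEvents rebuilds the three events above from their visible fields.
func cronJobEvents() []event {
	obj := func(rv string) objectRef {
		return objectRef{"batch/v1", "CronJob", "hello", "default", rv, "5f3cfeca-8a83-452a-beb9-7a5f9c1eff63"}
	}
	return []event{
		{obj("289520"), "cronjob-controller", "", "Normal", "SuccessfulCreate", "Created job hello-28025408"},
		{obj("289020"), "cronjob-controller", "", "Normal", "SawCompletedJob", "Saw completed job: hello-28025408, status: Complete"},
		{obj("289520"), "cronjob-controller", "", "Normal", "SuccessfulDelete", "Deleted job hello-28025408"},
	}
}

// distinctKeys counts how many different keys the events produce.
func distinctKeys(events []event, key func(event) string) int {
	seen := map[string]bool{}
	for _, e := range events {
		seen[key(e)] = true
	}
	return len(seen)
}

func main() {
	evs := cronJobEvents()
	fmt.Println("distinct spam keys:", distinctKeys(evs, spamKey))
	fmt.Println("distinct aggregate keys:", distinctKeys(evs, aggregateKey))
}
```

All three events collapse to a single spam key (one shared token bucket), while aggregation still keeps them apart by reason.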